|

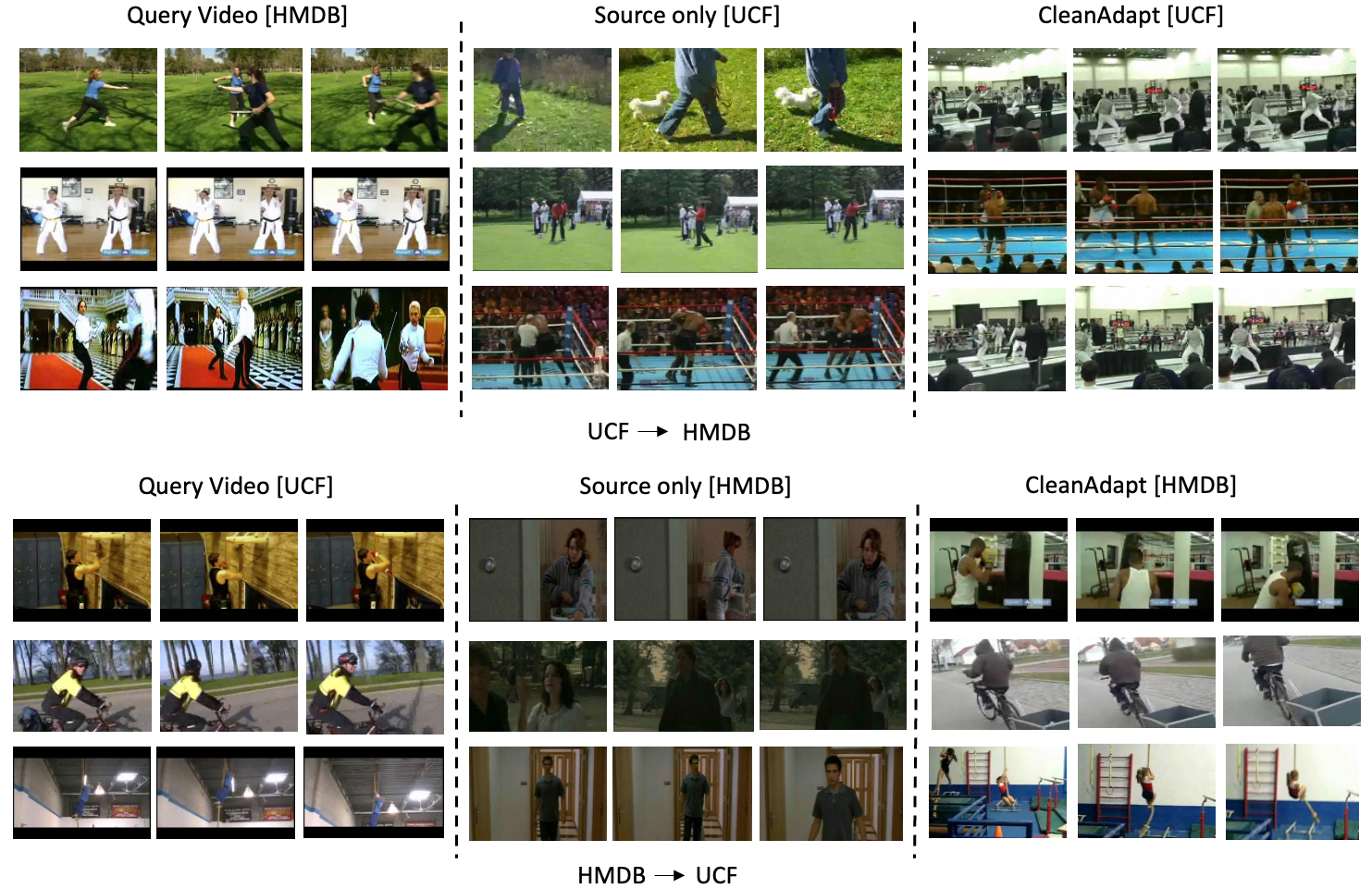

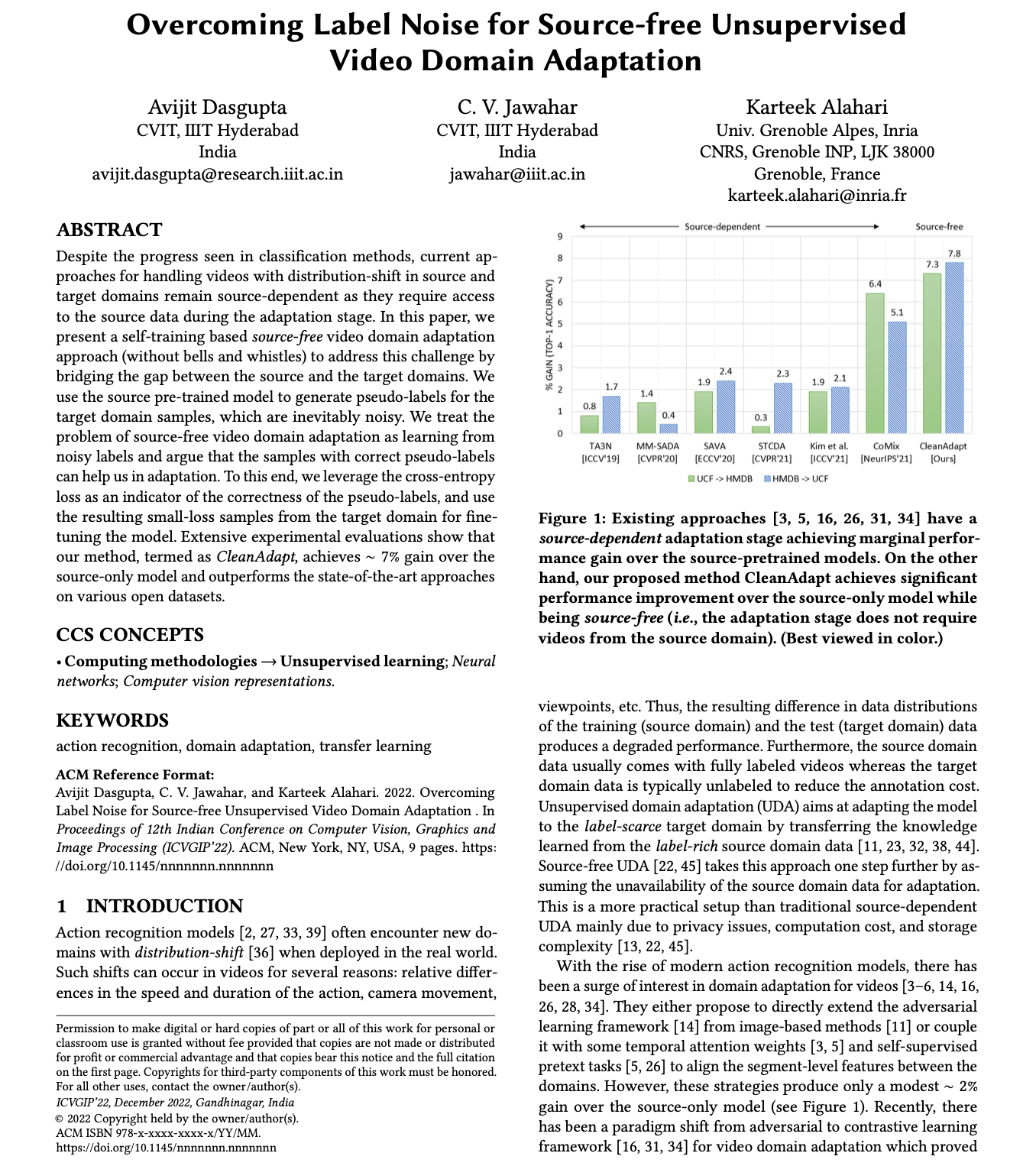

Despite the progress seen in classification methods, current approaches for handling videos with distribution-shift in source and

target domains remain source-dependent as they require access

to the source data during the adaptation stage. In this paper, we

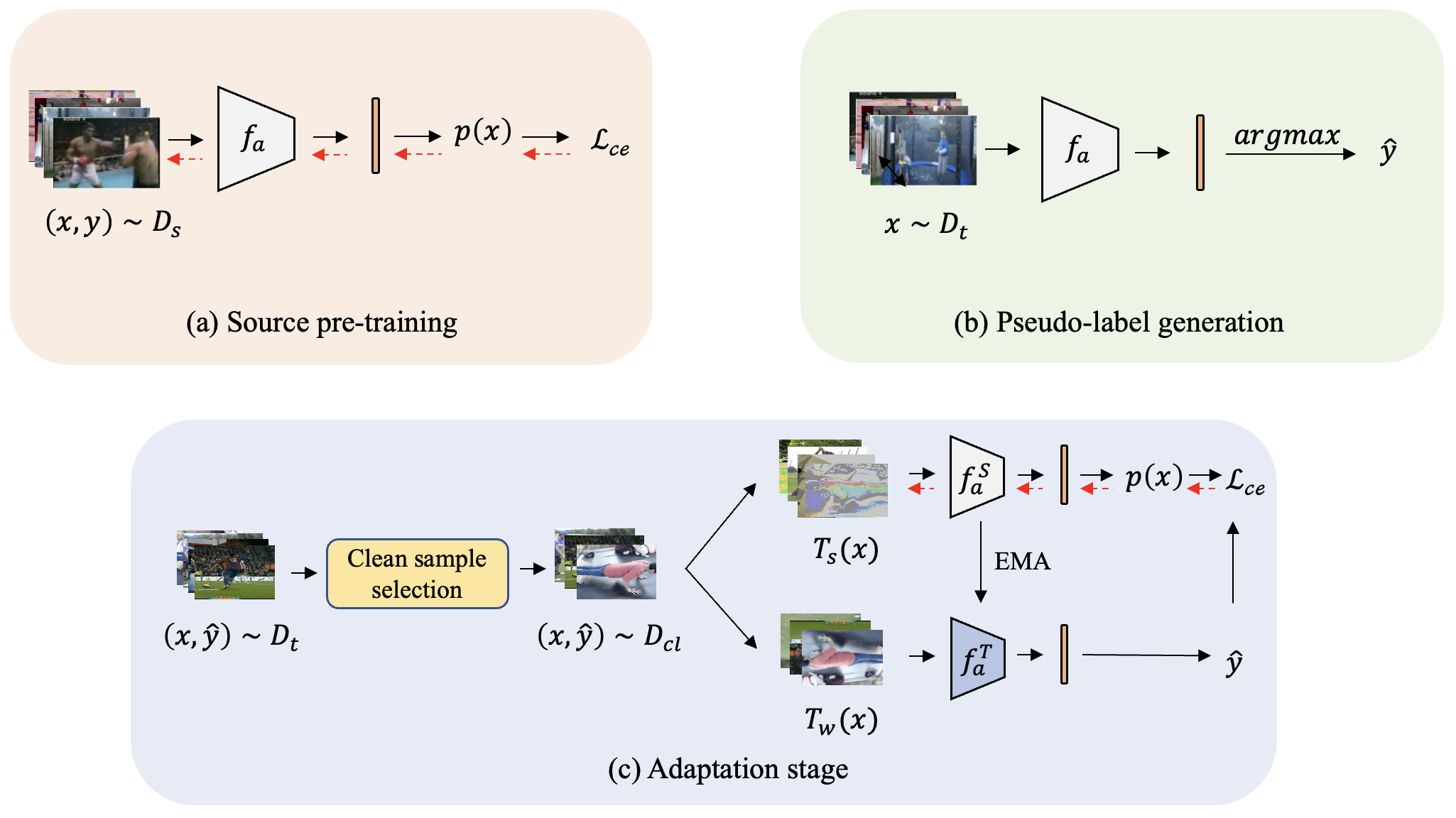

present a self-training based source-free video domain adaptation

approach (without bells and whistles) to address this challenge by

bridging the gap between the source and the target domains. We

use the source pre-trained model to generate pseudo-labels for the

target domain samples, which are inevitably noisy. We treat the

problem of source-free video domain adaptation as learning from

noisy labels and argue that the samples with correct pseudo-labels

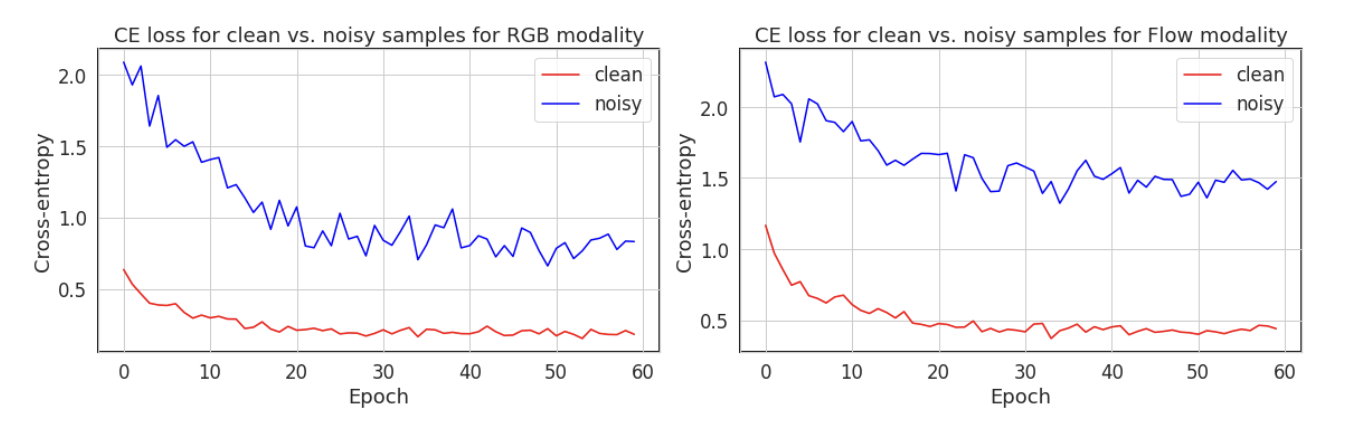

can help us in adaptation. To this end, we leverage the cross-entropy

loss as an indicator of the correctness of the pseudo-labels, and use

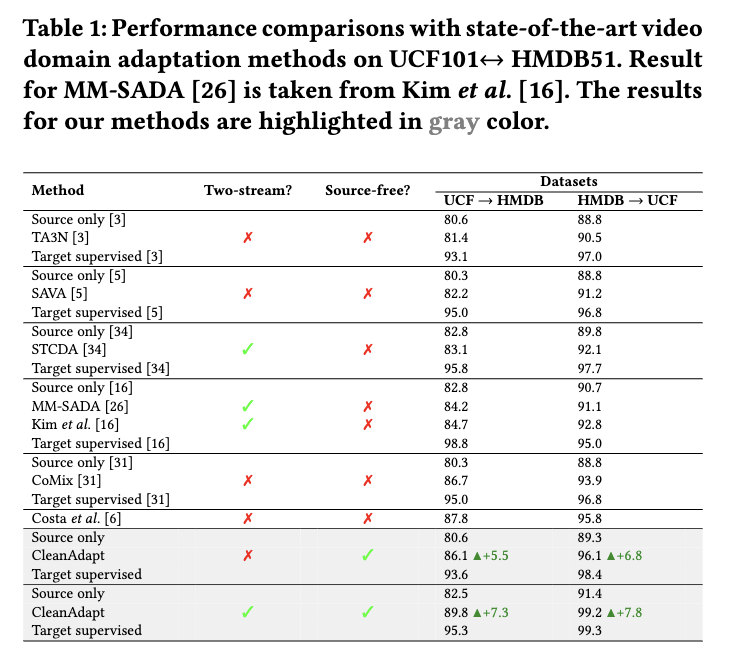

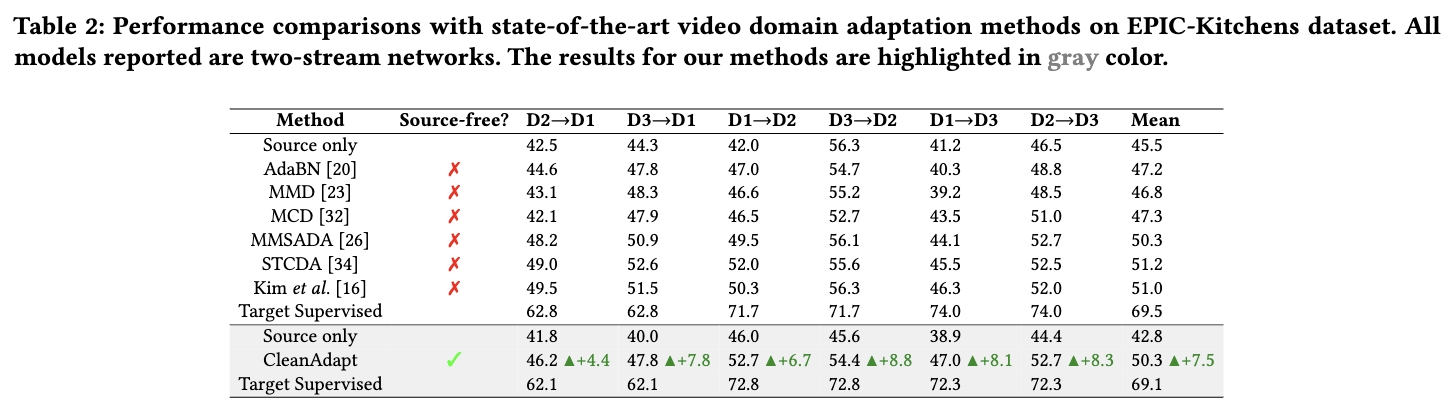

the resulting small-loss samples from the target domain for fine-tuning the model. Extensive experimental evaluations show that

our method, termed as CleanAdapt, achieves ∼ 7% gain over the

source-only model and outperforms the state-of-the-art approaches

on various open datasets.
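
The self-training loop summarized above has three steps: pseudo-label target samples with the source model, rank them by cross-entropy loss, and fine-tune on the small-loss subset. The following is a minimal NumPy sketch of those steps under stated assumptions. It is not the CleanAdapt implementation. A linear classifier over fixed toy features stands in for the video backbone. The names `select_small_loss`, `finetune_linear` and `keep_ratio` are hypothetical. Keeping a single global fraction of the lowest-loss samples is an assumed selection rule.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by the row max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(logits: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Per-sample cross-entropy -log p(label | x) for integer class labels."""
    probs = softmax(logits)
    return -np.log(probs[np.arange(len(labels)), labels] + 1e-12)


def select_small_loss(logits: np.ndarray, pseudo_labels: np.ndarray,
                      keep_ratio: float = 0.5) -> np.ndarray:
    """Hypothetical helper: indices of the keep_ratio fraction with the smallest loss."""
    # Assumption: one global keep ratio; the paper's exact selection rule may differ.
    losses = cross_entropy(logits, pseudo_labels)
    n_keep = max(1, int(round(keep_ratio * len(losses))))
    # Ascending sort: the smallest losses are treated as likely-correct pseudo-labels.
    return np.sort(np.argsort(losses, kind="stable")[:n_keep])


def finetune_linear(W: np.ndarray, feats: np.ndarray, labels: np.ndarray,
                    lr: float = 0.05, steps: int = 50) -> np.ndarray:
    """Hypothetical stand-in for fine-tuning: gradient descent on mean
    softmax cross-entropy for a linear model with logits = feats @ W."""
    onehot = np.eye(W.shape[1])[labels]
    for _ in range(steps):
        probs = softmax(feats @ W)
        grad = feats.T @ (probs - onehot) / len(labels)
        W = W - lr * grad  # returns a new array; the source weights are untouched
    return W


# Toy target "clips": fixed 3-d features; the source model is a linear
# classifier whose weights are the identity (both purely illustrative).
target_feats = np.array([
    [4.0, 0.0, 0.0],   # confident prediction -> small loss
    [0.4, 0.3, 0.3],   # ambiguous prediction -> large loss
    [0.0, 3.0, 0.0],   # confident prediction -> small loss
    [0.2, 0.0, 0.25],  # ambiguous prediction -> large loss
])
W_source = np.eye(3)

# 1) Pseudo-label the target samples with the source-pretrained model.
source_logits = target_feats @ W_source
pseudo_labels = source_logits.argmax(axis=1)

# 2) Keep the small-loss samples as the (presumed) clean subset.
clean_idx = select_small_loss(source_logits, pseudo_labels, keep_ratio=0.5)

# 3) Fine-tune on the clean subset only.
W_adapted = finetune_linear(W_source, target_feats[clean_idx],
                            pseudo_labels[clean_idx])

print("pseudo-labels:", pseudo_labels)  # pseudo-labels: [0 0 1 2]
print("clean subset:", clean_idx)       # clean subset: [0 2]
```

In this sketch the loss is computed by the same model that produced the pseudo-labels. The cross-entropy therefore reduces to the negative log of the top softmax probability, and the small-loss ranking coincides with a confidence ranking. A setup that scores samples with a separately updated model would not have this property.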
